Power BI Git Integration for BIOps and Version Control

Power BI Analysts vs Power BI Developers

Five years ago, most of us were referred to as Power BI analysts, but today companies want Power BI developers. In today’s business world, tightly coupled data and IT units are a must-have for organizations. That is the only way they can achieve proper enterprise data management, data governance, cybersecurity, efficient IT operations and genuine business intelligence.

More organizations now have a BI unit within their core IT and technology departments. At first, this was a way to create organization-wide management reports that would replace the traditional BI reports bundled as add-ons to database platforms. Now, it has become the only way to do proper data engineering in large companies. Business units have little incentive to think beyond their own needs and often lack data engineering knowledge, so they end up creating BI reports that are mostly Excel reports in Power BI clothing. These reports pull lots of ad-hoc data from static files and do all the data processing within Power BI. Every report builder ends up doing their own end-to-end modeling from their own copy of the source data, with no real collaboration and no central data warehouse. And like I said, the results become Excel reports in Power BI clothing.

Today, Power BI specialists must act like developers and not just analysts. They must understand that they are not replicating Excel and PowerPoint in Power BI, but are part of a development team that uses the output of other team members to create reporting and analytics artifacts that others can reuse. Hence the title: Power BI developer.

A Power BI developer must work with data engineers to ensure that data transformation, storage and enrichment happen outside of Power BI, and that there is as little duplicated modeling as possible across Power BI reports. They must make the most of datamarts, dataflows and shared semantic models to keep modeling as centralized and well governed as possible. They must build Power BI reports that follow software development best practices: good documentation, consistent design patterns, development-to-test-to-production deployment pipelines, and proper version control.

Now you know the difference between a Power BI analyst and a Power BI developer. We are going to focus the remainder of this post on the way a Power BI developer can achieve proper version control.

Power BI Native Git Integration

Version control has long been a need in the Power BI expert community, and different experts came up with interesting ways to automatically keep old copies of a report as it is updated, along with a way to revert to a previous version when needed. The approach that gained the most traction was to use a SharePoint folder as a staging folder from which reports are published to Power BI. This leverages SharePoint's built-in versioning, but without the descriptive commit messages, smaller footprint and changelogs that a Git-based version control system provides.

Remember what I said about Power BI developers aligning with modern software development practices? Those practices go beyond versioning to include peer code review, team collaboration and automated deployment. None of these were possible with SharePoint or any of the other common workarounds people came up with.

Previously, it was not possible to achieve CI/CD natively in Power BI.

Now, if your workspace is on a Premium or Fabric capacity in the Power BI service, you can use the native Git integration. That gives you a proper modern versioning system and, eventually, full CI/CD (continuous integration and continuous delivery).

Power BI + Azure Repos + Git + Visual Studio Code = Native Version Control and CI/CD

Setting up Power BI Git

To set up Git in Power BI, we need four tools: a Premium or Fabric Power BI workspace, Azure Repos, Git and Visual Studio Code.

We start by creating a new Premium or Fabric capacity workspace. You can also use an existing one.

Fabric or premium workspace creation

Once the workspace is created, the next step is to connect it to an Azure DevOps repo branch. For many people, that means first creating an Azure DevOps project and the branch to connect Power BI to. You can do this at https://dev.azure.com/. The site sometimes sends you round in circles back to the same sign-up/login page; make sure you eventually land on a page like the one below, or on one asking you to create an organization. Then create a project to house your Power BI resources. I called mine Fabric, as I intend to do all things Fabric within the project.

Azure DevOps Project
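If you would rather script the project creation than click through the portal, the Azure DevOps REST API has a "Projects - Create" call. The sketch below only builds the request (URL, headers, JSON body) and does not send it; the organization name `my-org`, the helper names and the use of a personal access token (PAT) are assumptions for illustration, and the process template ID must be looked up for your own organization.

```python
import base64
import json


def ado_auth_header(pat: str) -> dict:
    """Azure DevOps accepts a personal access token via Basic auth with an empty user name."""
    token = base64.b64encode(f":{pat}".encode("ascii")).decode("ascii")
    return {"Authorization": f"Basic {token}", "Content-Type": "application/json"}


def create_project_request(org: str, project: str, process_template_id: str, pat: str):
    """Build (url, headers, body) for the Azure DevOps 'Projects - Create' REST call (hypothetical helper)."""
    url = f"https://dev.azure.com/{org}/_apis/projects?api-version=7.0"
    body = {
        "name": project,
        "description": "Power BI / Fabric items under Git source control",
        "capabilities": {
            # Git (not TFVC) is the repository type Power BI Git integration connects to.
            "versioncontrol": {"sourceControlType": "Git"},
            # Look the ID up with GET https://dev.azure.com/{org}/_apis/process/processes
            "processTemplate": {"templateTypeId": process_template_id},
        },
    }
    return url, ado_auth_header(pat), json.dumps(body)
```

The request could then be sent with any HTTP client as a POST; creation is asynchronous, so the project may take a few seconds to appear.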

Within the newly created project, you can initialize a default main branch and use that to sync with the Power BI service. Alternatively, after initializing main, you can create a new branch (maybe call it dev-pbi) and use that instead. In my case, I only initialized a main branch and went ahead to connect it to the Power BI service.

Initialized a main branch
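For the alternative route of a separate dev-pbi branch, Azure Repos can create a branch through its "Refs - Update Refs" REST call: you send the new ref name with an all-zero old object ID and the commit SHA it should start from (for example, the tip of main). This is a sketch that only builds the request; the helper name and the assumption that the repository name can stand in for the repository ID are illustrative.

```python
import json
from urllib.parse import quote

ZERO_SHA = "0" * 40  # an all-zero old object ID tells Azure Repos the ref does not exist yet


def create_branch_request(org, project, repo, new_branch, source_commit_sha):
    """Build (url, body) for an Azure Repos 'Refs - Update Refs' call that creates a branch (hypothetical helper)."""
    if len(source_commit_sha) != 40:
        raise ValueError("expected a full 40-character commit SHA")
    url = (f"https://dev.azure.com/{quote(org)}/{quote(project)}/_apis/git/"
           f"repositories/{quote(repo)}/refs?api-version=7.0")
    body = [{
        "name": f"refs/heads/{new_branch}",
        "oldObjectId": ZERO_SHA,
        "newObjectId": source_commit_sha,  # e.g. the current tip of main
    }]
    return url, json.dumps(body)
```

The same PAT-based Basic auth header used for project creation would apply here.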

We then head back to the Power BI service, open the settings of the workspace we want to use, and point the Git integration section at the main branch we just initialized. If you cannot see your organization and project, there is a high chance that your Power BI or Fabric tenant is hosted in a different region from your Azure DevOps organization.

The fix is easy. Go to the Power BI admin portal, open Tenant settings and scroll down to the Git integration section. Then enable “Users can export items to Git repositories in other geographical locations”.

Once you click Connect and sync, the workspace shows a Source control status; clicking it opens the Source control pane on the right.
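The Connect and sync step also has a REST equivalent in the Fabric Git APIs: "Git - Connect" attaches the workspace to a branch, and "Git - Initialize Connection" performs the first sync. The sketch below assumes your workspace is reachable through the Fabric REST API and that you already hold a Microsoft Entra ID bearer token; it only builds the requests, and the helper names are hypothetical.

```python
import json

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def git_connect_request(workspace_id, org, project, repo, branch, directory="/"):
    """Build (url, body) for the Fabric 'Git - Connect' call with Azure DevOps as the provider."""
    url = f"{FABRIC_API}/workspaces/{workspace_id}/git/connect"
    body = {"gitProviderDetails": {
        "gitProviderType": "AzureDevOps",
        "organizationName": org,
        "projectName": project,
        "repositoryName": repo,
        "branchName": branch,
        "directoryName": directory,  # folder inside the repo that holds the workspace items
    }}
    return url, json.dumps(body)


def git_initialize_request(workspace_id, prefer="PreferWorkspace"):
    """Build (url, body) for 'Git - Initialize Connection', the API counterpart of the first sync."""
    if prefer not in ("PreferWorkspace", "PreferRemote"):
        raise ValueError(f"unknown initialization strategy: {prefer}")
    url = f"{FABRIC_API}/workspaces/{workspace_id}/git/initializeConnection"
    return url, json.dumps({"initializationStrategy": prefer})
```

Both calls are POSTs with an `Authorization: Bearer <token>` header. PreferWorkspace mirrors the portal flow here, where the workspace content is pushed into an empty branch.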

Next, we will publish a Power BI report into that workspace and see how, from then on, we no longer need the Publish button or the binary .pbix file. After that first publish, we will use source control to manage updates, and Visual Studio Code to work with a local copy of the Azure Repos branch, even making code-level edits to the project folder that replaces the .pbix file we published.

Head to the Power BI service and you will immediately see the Source control status showing 2 pending changes. Clicking it opens a pane listing the published report and its semantic model. Now we can do our first commit from the Power BI service to Azure DevOps.
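The commit you make from the Source control pane maps to two Fabric Git API calls: "Git - Get Status" lists the pending changes and the workspace head, and "Git - Commit To Git" commits them. This sketch turns a status response into a commit-everything request; the status dictionary in the usage below is a simplified stand-in for the real response, and the helper name is hypothetical.

```python
import json

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def commit_all_request(workspace_id, status, comment):
    """Build (url, body) for 'Git - Commit To Git' from a 'Git - Get Status' response."""
    if not status.get("changes"):
        return None  # nothing to commit, like an empty Source control pane
    url = f"{FABRIC_API}/workspaces/{workspace_id}/git/commitToGit"
    body = {
        "mode": "All",  # commit every pending item, as the pane's Commit button does when all are selected
        "workspaceHead": status["workspaceHead"],  # last synced commit, taken from Get Status
        "comment": comment,
    }
    return url, json.dumps(body)
```

For the freshly published report, the status would list two changes (the report and its semantic model), so the helper returns a request rather than `None`.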

Then, over in Azure DevOps, you will see that the main branch now contains the report and semantic model folders that represent the published work.
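Each workspace item lands in its own folder named after the item and its type, such as `Sales.Report` and `Sales.SemanticModel` (the `Sales` name is just an example). A small script can summarize a checked-out branch by grouping those folders by type; this is a sketch that assumes the items sit at the repo root.

```python
from pathlib import Path


def list_fabric_items(repo_root):
    """Group top-level item folders by type, e.g. 'Sales.Report' -> {'Report': ['Sales']}."""
    items = {}
    for folder in sorted(Path(repo_root).iterdir()):
        # Skip files and hidden folders such as .git; item folders look like '<name>.<Type>'.
        if folder.is_dir() and "." in folder.name and not folder.name.startswith("."):
            name, _, item_type = folder.name.rpartition(".")
            items.setdefault(item_type, []).append(name)
    return items
```

`rpartition` splits on the last dot, so an item name that itself contains dots still keeps its full name.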

Congratulations on getting this far!

We are done setting up the Power BI service and Azure Repos. What remains is connecting our local computer to Azure Repos so we can complete the update cycle: update on any platform and see the change reflected on all of them. Right now, changes in the Power BI service and Azure Repos can sync with each other, but nothing we do on those two platforms reaches the copy of the report on our local computer.

Syncing with our local computer is actually easy. We need to install Visual Studio Code and Git. Once that is done, there is just one more major step: clone the repo to your local machine. Azure Repos makes this a single-click process; just go to the branch and click Clone. If you prefer a different IDE or code editor to Visual Studio Code, that works too.

Once cloning is done, open the folder and you’ll see the same file structure as on Azure Repos. From now on, to launch and update the Power BI report, open the .pbir file in the Report folder with Power BI Desktop.
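The `definition.pbir` file is small JSON that tells Power BI Desktop where the report's data comes from: either `byPath`, a relative path to a sibling semantic model folder, or `byConnection`, a connection to a model already in the service. This sketch reads it to report which one applies; the helper name and the sample folder names are assumptions.

```python
import json
from pathlib import Path


def bound_semantic_model(report_folder):
    """Return ('byPath', folder) or ('byConnection', details) from a report's definition.pbir."""
    # utf-8-sig tolerates a byte-order mark if an editor added one.
    pbir = json.loads((Path(report_folder) / "definition.pbir").read_text(encoding="utf-8-sig"))
    ref = pbir.get("datasetReference", {})
    if ref.get("byPath"):
        # Local binding: the path is relative to the report folder.
        return "byPath", (Path(report_folder) / ref["byPath"]["path"]).resolve()
    if ref.get("byConnection"):
        # Remote binding to a semantic model already published in the service.
        return "byConnection", ref["byConnection"]
    raise ValueError("definition.pbir has no datasetReference")
```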

You will need to hit Refresh on the first open because the data does not sync via Git. This keeps the repository small, since the data makes up the bulk of most Power BI files.

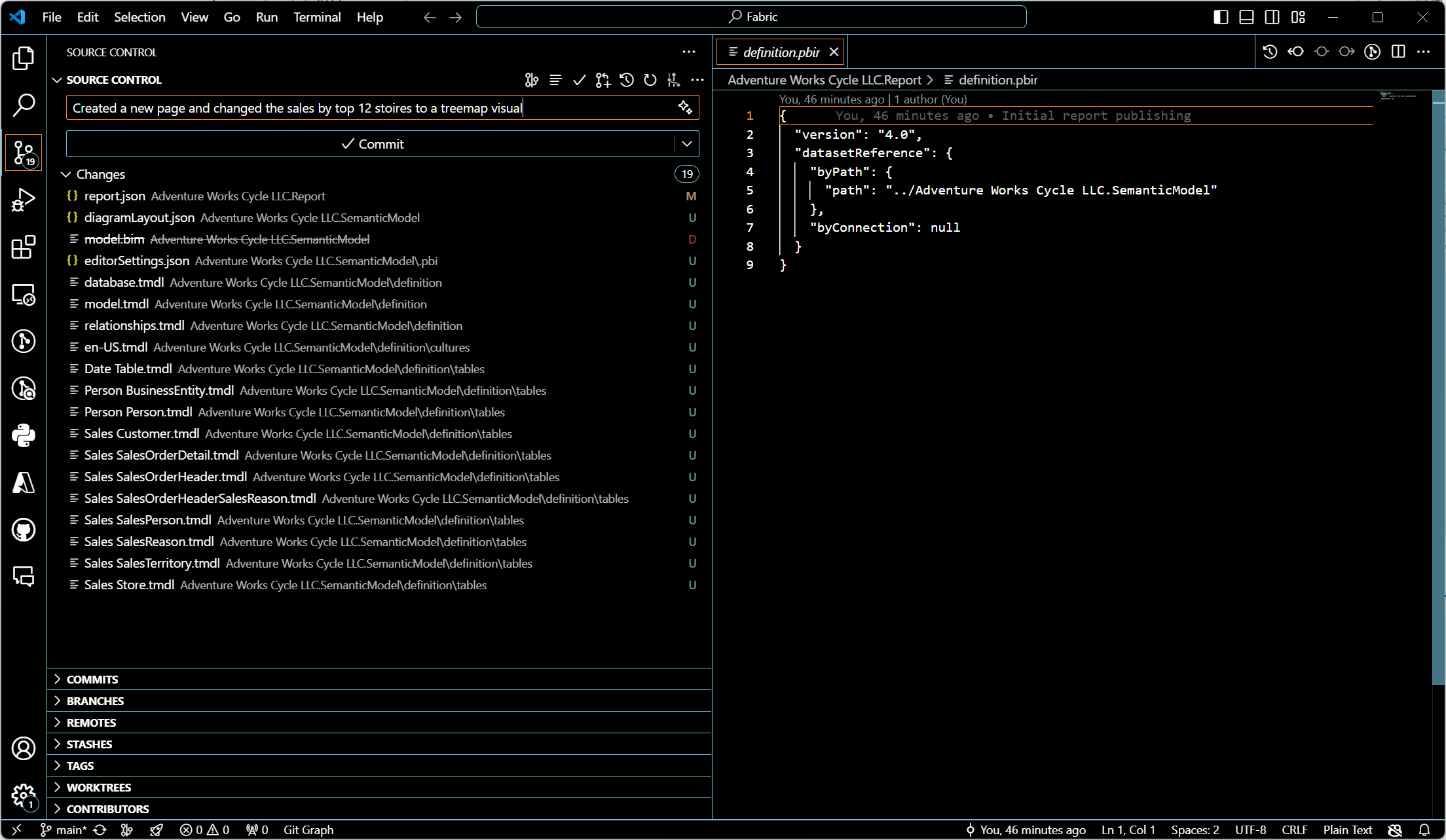
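The data stays out of Git because the local cache lives in a `.pbi` folder that is ignored; by default Power BI Desktop writes `**/.pbi/localSettings.json` and `**/.pbi/cache.abf` into the project's `.gitignore`. If your clone lacks those entries, a sketch like this (hypothetical helper) appends the missing ones so the cache never gets committed.

```python
from pathlib import Path

# Default ignore entries for Power BI projects: per-user settings and the local data cache.
PBI_IGNORE = ["**/.pbi/localSettings.json", "**/.pbi/cache.abf"]


def ensure_pbi_gitignore(repo_root):
    """Append any missing Power BI cache entries to <repo_root>/.gitignore; return what was added."""
    path = Path(repo_root) / ".gitignore"
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    existing = [line.strip() for line in text.splitlines()]
    missing = [entry for entry in PBI_IGNORE if entry not in existing]
    if missing:
        # Start on a new line if the file does not already end with one.
        prefix = "" if text == "" or text.endswith("\n") else "\n"
        path.write_text(text + prefix + "\n".join(missing) + "\n", encoding="utf-8")
    return missing
```

Running it twice is safe: the second call finds both entries and changes nothing.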

Go ahead and make a change to the local copy you have just opened. For instance, duplicate the Sales Report page and change the bottom visual to a treemap. Save, and the Source Control view in Visual Studio Code lights up with the tracked changes and prompts you to commit them to the local repo and then sync with the remote repo (Azure Repos). If you get a message asking to upgrade the semantic model to the TMDL format, accept it. That changes the model definition from a single model.bim file into a more readable definition folder of .tmdl files, with a separate file for each table in the model.
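After the upgrade, the semantic model folder holds a `definition` folder instead of `model.bim`, with one `.tmdl` file per table under `definition/tables`, each starting with a `table <name>` line (names containing spaces are single-quoted). This sketch detects which format a model uses and lists the TMDL table names; it is a simple line-based reader, not a full TMDL parser.

```python
import re
from pathlib import Path

TABLE_DECL = re.compile(r"^table\s+(.+?)\s*$")  # unindented 'table <name>' declaration


def model_format(semantic_model_folder):
    """Return 'TMDL' for a definition/ folder, 'TMSL' for a single model.bim file."""
    folder = Path(semantic_model_folder)
    if (folder / "definition").is_dir():
        return "TMDL"
    if (folder / "model.bim").is_file():
        return "TMSL"
    raise FileNotFoundError("no model.bim or definition/ folder found")


def tmdl_tables(semantic_model_folder):
    """List table names declared in definition/tables/*.tmdl."""
    names = []
    for f in sorted((Path(semantic_model_folder) / "definition" / "tables").glob("*.tmdl")):
        for line in f.read_text(encoding="utf-8-sig").splitlines():
            match = TABLE_DECL.match(line)
            if match:
                names.append(match.group(1).strip("'"))  # drop quotes around names with spaces
                break
    return names
```

Because each table is its own file, a Git diff after an edit points straight at the table that changed.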

Now on to the magic part!

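Without hitting the Publish button in Power BI Desktop, go to the Power BI service and you will see the incoming updates in the Source control pane, ready to be applied with Update all. That is how, going forward, we no longer need the Publish button, and how multiple Power BI developers can collaborate on a report with Git tracking every change and flagging conflicting edits instead of silently overwriting each other’s work.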
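Update all in the Source control pane corresponds to the Fabric "Git - Update From Git" call, which moves the workspace to the branch's latest commit. This sketch builds that request from a "Git - Get Status" response and skips it when the workspace head already equals the remote commit; the helper name and the simplified status dictionary are assumptions, and conflict-resolution options are left out.

```python
import json

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def update_from_git_request(workspace_id, status):
    """Build (url, body) for 'Git - Update From Git' from a 'Git - Get Status' response."""
    remote, head = status["remoteCommitHash"], status.get("workspaceHead")
    if remote == head:
        return None  # the workspace already reflects the latest commit on the branch
    url = f"{FABRIC_API}/workspaces/{workspace_id}/git/updateFromGit"
    body = {"remoteCommitHash": remote, "workspaceHead": head}
    return url, json.dumps(body)
```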

You can also edit the report within the Power BI service, commit the change from the Source control pane, and pull it into your local clone without having to download or export a .pbix file.

And that brings us to the end of this demo. I hope you use this more in your Power BI development process. If you are ambitious, you can take it further by setting up a proper build and deployment pipeline with Azure Pipelines on top of Azure Repos. Microsoft’s documentation covers this: https://learn.microsoft.com/en-us/power-bi/developer/projects/projects-build-pipelines
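As a first step toward such a pipeline, a build job could run a quick structural check before anything heavier. The sketch below is a simple stand-in, not the validation tooling Microsoft's guide describes: it assumes every `.Report` folder must contain `definition.pbir` and every `.SemanticModel` folder must contain `definition.pbism`, and the helper names are hypothetical.

```python
from pathlib import Path

# Minimal file each item folder is expected to contain (an assumption; extend as needed).
REQUIRED = {".Report": "definition.pbir", ".SemanticModel": "definition.pbism"}


def validate_repo(repo_root):
    """Return a list of problems; an empty list means every item folder has its required file."""
    problems = []
    for folder in sorted(Path(repo_root).iterdir()):
        if not folder.is_dir():
            continue
        for suffix, required_file in REQUIRED.items():
            if folder.name.endswith(suffix) and not (folder / required_file).is_file():
                problems.append(f"{folder.name}: missing {required_file}")
    return problems


def main(argv):
    """Print the problems (or OK) and return a process exit code for the pipeline step."""
    issues = validate_repo(argv[1] if len(argv) > 1 else ".")
    print("\n".join(issues) or "OK")
    return 1 if issues else 0
```

A pipeline step could run this with `sys.exit(main(sys.argv))`, so a non-zero exit code fails the build.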

You can read more about the different artifacts within the Power BI solution folder, which is now where you create and update your Power BI reports:

Don’t forget to subscribe to our newsletter so you never miss the valuable tutorials and posts we publish. Enjoy your week, and remember to ditch the analyst mindset for a developer mindset!